The Value of Human Touch in Healthcare AI: Is It Important?

Healthcare stands at a fascinating crossroads. On one side, we have AI systems that can diagnose diseases with unprecedented accuracy, automate complex workflows, and process millions of data points in seconds.

On the other side, we have patients who still crave understanding, compassion, and the reassuring presence of someone who truly listens. The question isn't whether AI belongs in healthcare; it clearly does. The real question is how we design these systems to amplify rather than replace the human elements that make healthcare truly healing.

Why Perfect Systems Create Imperfect Experiences

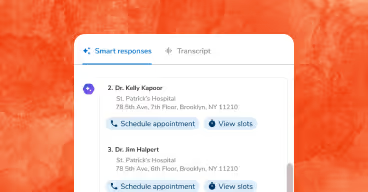

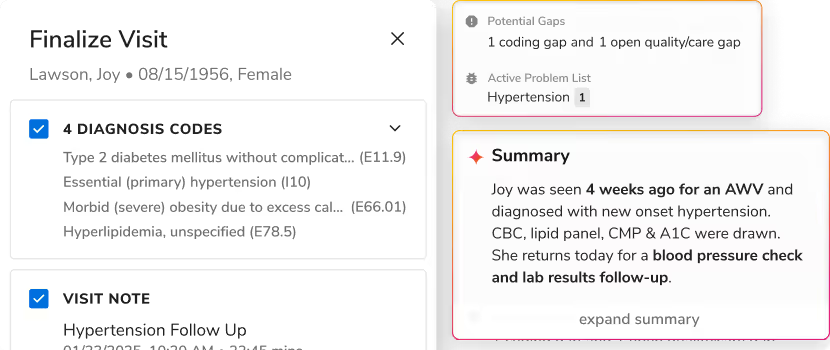

Walk into any modern healthcare environment and you will likely find AI in some form, from chatbots that help schedule appointments to algorithms that help predict when patients will deteriorate. These tools have increased efficiency: wait times have fallen, the task burden on ancillary staff has eased, and diagnostic confidence has improved.

However, a curious phenomenon has emerged. As healthcare has become more efficient, patient satisfaction scores have not always improved. Research suggests patients continue to rate "being heard" and emotional support above efficiency. These are also needs that no algorithm can meet. This is not a failure of the technology; it is a reminder that healthcare is fundamentally a human interaction.

The most successful healthcare organizations recognize this paradox. They use AI not to replace human interaction but to enhance it. When AI handles the routine groundwork around a visit, such as verifying insurance or sending appointment reminders, the clinician gains back precious minutes to sit with patients and ask, "How are you really doing?"

How AI Creates Time for What Matters Most

The most advanced healthcare organizations have learned something remarkable: AI does not detract from human compassion. It can even enhance it by offloading data-heavy activities from healthcare workers, allowing them to focus on connection, comfort, and care.

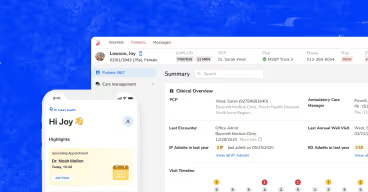

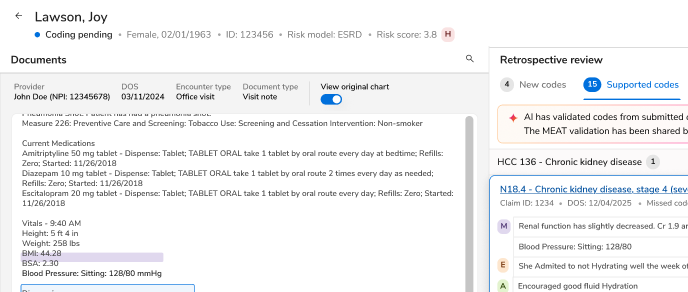

AI copilots in call centers do not take away the warmth of an agent’s conversation; rather, they give the agent real-time insights into patients' backgrounds, preferences, and potential issues. With these insights, each agent can tailor the interaction, demonstrating a level of understanding that can feel almost prophetic.

AI That Respects Patient Dignity

Creating AI systems that preserve patient dignity requires intentional design choices. It starts with recognizing that every patient interaction is an opportunity to either build or erode trust.

- Transparency matters: Patients should always know when they're interacting with AI versus a human. However, this disclosure should be done thoughtfully. Instead of cold warnings about "automated systems," successful implementations should frame AI as a collaborative tool: "I'm using our AI assistant to help ensure we don't miss anything important in your care."

- Choice remains paramount: Although AI has introduced remarkable conveniences, such as chat support available 24/7 or scheduling an appointment without having to talk to anyone, patients should always be able to speak with a human being. This is not merely a matter of patient preference; it reflects an understanding that healthcare decision-making is often a complex interplay of emotions, culture, and values that cannot be managed by artificial intelligence.

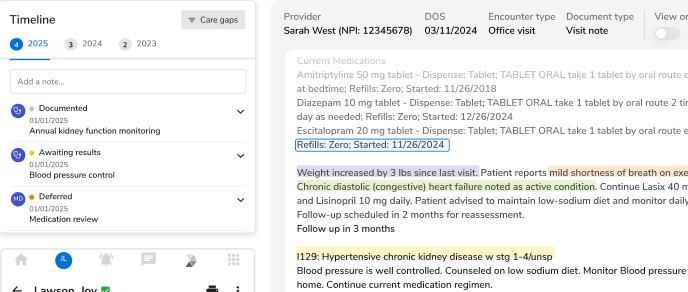

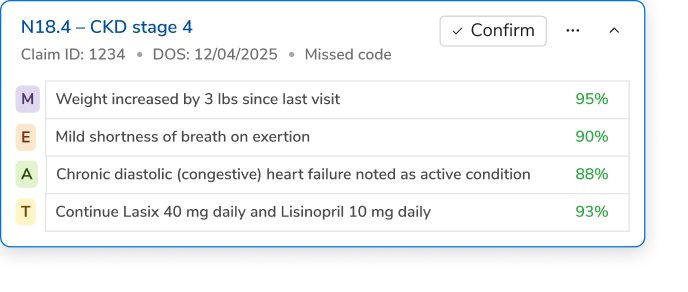

- AI should enhance personalization, not standardize it: While it's tempting to use AI for one-size-fits-all solutions, the technology shines when it helps healthcare providers understand and respond to individual patient needs. This might mean using natural language processing to detect emotional distress in patient messages or employing predictive analytics to identify patients who would benefit from proactive outreach.
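As a rough illustration of the distress-detection idea above, the following Python sketch flags patient messages for human follow-up. It is a deliberately simple keyword match standing in for a real natural language processing model. The phrase list, the `TriageResult` type, and the `flag_for_human_follow_up` function are all hypothetical names invented for this example, not part of any product or library.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical phrase list for illustration only; it is not a clinically
# validated lexicon, and a production system would likely use a trained model.
DISTRESS_PHRASES = ("scared", "afraid", "hopeless", "overwhelmed", "can't cope", "alone")


@dataclass
class TriageResult:
    needs_human_follow_up: bool
    matched_phrases: List[str]


def flag_for_human_follow_up(message: str) -> TriageResult:
    """Flag a message for a person to review; never generate a reply."""
    # Normalize case and curly apostrophes so "Can’t cope" still matches.
    text = message.lower().replace("\u2019", "'")
    # Match whole words or phrases only, so "alone" does not match "standalone".
    matched = [
        phrase
        for phrase in DISTRESS_PHRASES
        if re.search(rf"\b{re.escape(phrase)}\b", text)
    ]
    return TriageResult(needs_human_follow_up=bool(matched), matched_phrases=matched)
```

Under these assumptions, a message such as "I'm scared about my results and feel overwhelmed." would be flagged, while a routine rescheduling request would not. The design choice worth noting is that the flag only routes the message to a person; the conversation itself stays with a human.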

Using AI to Create Time for What Algorithms Can’t Replace

Rather than asking how AI can make us more efficient, we should ask how AI can make us more human. The answer is that AI should not replace human judgment with an algorithm; it should supplement that judgment.

The healthcare leaders of tomorrow will not be those with the most advanced AI systems, but those who understand how to combine the technological capacity of AI with the wisdom of humanity. They will understand that AI can, for example, predict which patients are likely to miss an appointment, but only humans can understand why a patient is likely to miss it and decide how, or whether, to intervene.
